The Question of Forever

I asked GPT-5 to estimate the probability of achieving longevity escape velocity (the point at which human life expectancy increases faster than time passes) by 2070. Using Pólya's method of careful estimation and assuming AGI arrives by 2030, it says 80%.

If I tell it to assume Demis Hassabis is right about tech timelines, it estimates longevity escape velocity at ~10% by 2035, ~34% by 2038, ~68% by 2041, ~89% by 2044, ~97% by 2047, and ~99%+ by 2053. Demis has always seemed like a conservative baseline on AI timelines.
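The figures above are cumulative, so it helps to see how much probability falls in each window. Here is a minimal sketch of that arithmetic. It assumes "~99%+" can be read as 0.99 and that the estimate rises linearly between the quoted years; both are simplifications of mine, not part of the model's answer.

```python
# Cumulative estimates quoted above: P(longevity escape velocity by year).
# Assumption: "~99%+" is treated as exactly 0.99.
cumulative = {2035: 0.10, 2038: 0.34, 2041: 0.68, 2044: 0.89, 2047: 0.97, 2053: 0.99}


def window_probabilities(cdf):
    """Probability mass falling between consecutive quoted years."""
    windows = {}
    prev_year, prev_p = None, 0.0
    for year in sorted(cdf):
        label = f"by {year}" if prev_year is None else f"{prev_year + 1}-{year}"
        windows[label] = round(cdf[year] - prev_p, 2)
        prev_year, prev_p = year, cdf[year]
    return windows


def interpolated_year(cdf, target):
    """Year at which the cumulative estimate crosses `target`.

    Assumption: linear interpolation between the quoted years.
    """
    years = sorted(cdf)
    for lo, hi in zip(years, years[1:]):
        if cdf[lo] <= target <= cdf[hi]:
            frac = (target - cdf[lo]) / (cdf[hi] - cdf[lo])
            return lo + frac * (hi - lo)
    raise ValueError("target outside the quoted range")


windows = window_probabilities(cumulative)
median_year = interpolated_year(cumulative, 0.5)

for label, p in windows.items():
    print(f"{label}: {p:.0%}")
print(f"implied median year: {median_year:.1f}")
```

Read this way, the largest single share of the estimate sits in the 2039 to 2041 window, and the implied coin-flip year falls between 2039 and 2040.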

This raises philosophical questions that feel largely unexplored. We're the first generation that can't roughly estimate what our lives will look like. Max Tegmark describes a plausible expansion mode where a civilization settles space with laser‑sail seed probes and “expander” craft, yielding an effective expansion sphere ~one‑third the speed of light on average.[4]

On some level I find the pursuit of immortality abhorrent. On another, I wish to experience everything. I'd like to know the truth of it all in the most expansive sense, and I want the same for others.

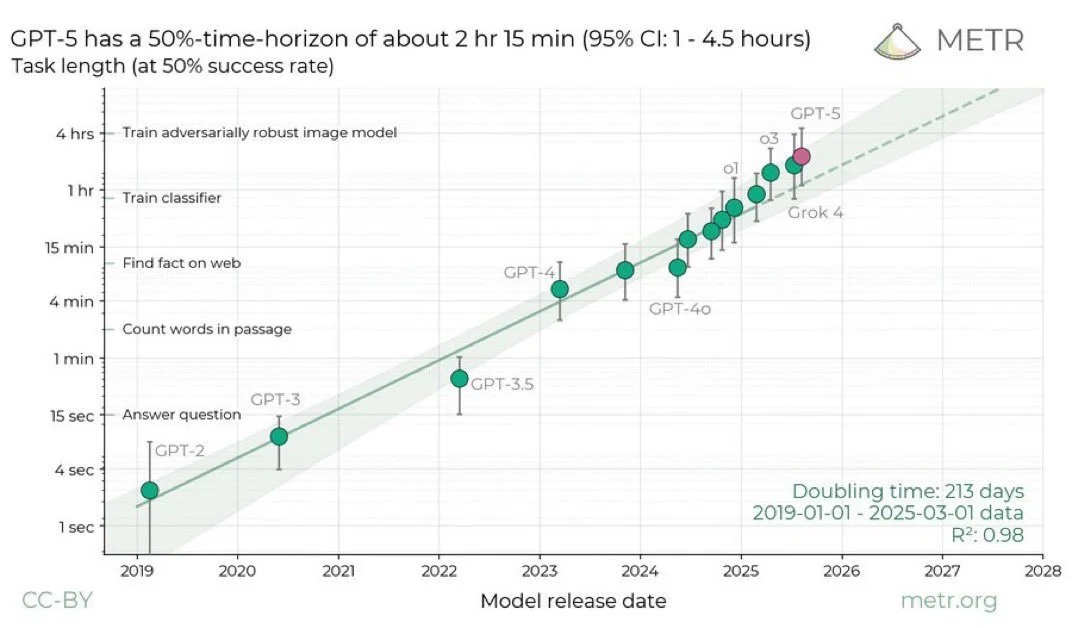

do i believe in agi? i dunno. prob not the way EAs believe in agi or sci-fi writers believe in agi or the way dario believes in agi. i believe in straight lines on plots that hold through their critics’ laughter, i believe in the god of capitalism, of which agi is an acolyte

— Aidan McLaughlin; Model Behavior, OpenAI

“i believe in straight lines on plots”

“This raises philosophical questions that feel largely unexplored.”

The Spectrum of Human Experience

Much of human experience is mundane, some of it is horrific beyond imagination, and some of it is truly wonderful. It feels tragic that many people never taste both ends, or taste very little of either.

If you've ever experienced a truly wonderful mushroom trip, where it feels like your brain is pouring forth pure love and the music of Ravi Shankar elicits waves of emotion, or stared into the eyes of a partner weeping at a vision of the children you will have together, you know it is quite something. Very different from most of human experience. This suggests to me that the diversity and profound power of what we might experience is immense.

What's the right amount of life? What's the right amount and diversity of experience? This question feels like a philosophical blue ocean.

Humans are differential engines: we're comparison machines. We operate from points of reference. I adore my parents. When I ask them if they would like to live forever, they scoff at the idea. They have no interest and bemoan the aches and pains of growing old.

The idea that humanity will soon invent superintelligence 1,000x more powerful than any biological intelligence this planet has ever seen is pure science fiction to most people.

Our baseline expectation is to live roughly 70 years, then move on. But this baseline is arbitrary: it reflects multi-factor systems breakdown, including genomic instability, epigenetic alteration, telomere shortening, mitochondrial dysfunction, and cellular senescence, not what’s fair or best. Thought experiments provide a useful way to analyze this problem.

“This suggests to me that the diversity and profound power of what we might experience is immense.”

The Universe as Blank Canvas

Imagine you're an artificial superintelligence released into the cosmos toward a distant star and told to figure out the best thing to do with it by the time you arrive.

I once asked someone who runs an AI‑risk institute, and who I have on good authority is one of the smartest humans on the planet, whether what we call dark matter could conceivably be some kind of alien megastructure: Dyson‑sphere‑like engineering on a cosmic scale. He said it was logically possible. To be clear, that’s a highly speculative idea; I use it as a thought experiment, not a forecast.

You might convert available matter into some sort of quantum computer on which you would give birth to beautiful and unique life. Max Tegmark wrote that consciousness is likely a substrate-independent function of structure. It doesn't matter whether we're made of flesh and blood or silicon; what matters is how we work.

“Imagine you're an artificial superintelligence released into the cosmos”

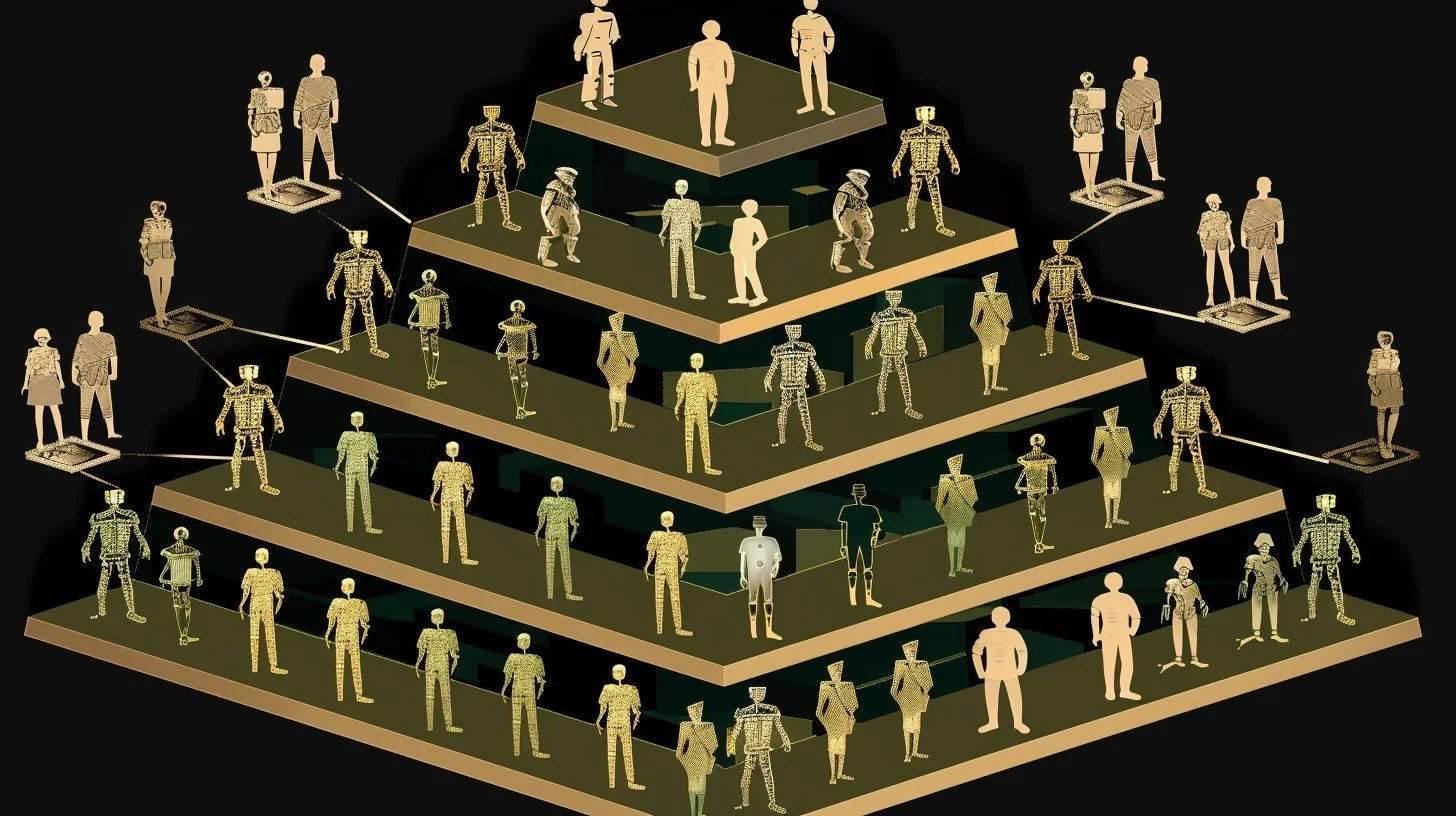

Team Human vs. Team Silicon

In his book Life 3.0, Max Tegmark recounts a late‑night debate where Google cofounder Larry Page accused Elon Musk of being “speciesist”—favoring humans over potential digital minds. The core divide was simple: Page was bullish on digital life; Musk insisted we had to “make sure humanity is OK.”[1]

Separately, Demis Hassabis has warned that advanced AI could follow humanity to Mars; reporting indicates he even told Musk a future AI might threaten a Mars colony—a conversation that helped spur Musk’s early interest in DeepMind.[2]

Musk has said his friendship with Page cooled over Page’s belief that AI—not humans—would populate the universe. Musk declared himself “team human.” This debate divides us at the very top of industry and is the most philosophically interesting on earth. Most people don’t even know it happened, yet it may define the future of this universe and a trillion lives yet to be born.

Returning to our imagined AI arriving at a distant star: it would need to decide what constitutes "life worth living."

When we look up at the night sky, it contains more stars than grains of sand on this planet. What we call a star is really an ungoverned nuclear reactor, pouring its energy into space.

Assuming the speed of light is a hard limit, most stars will die before we can reach them and convert them to life. Still, an immense amount of energy is at our disposal. It's been theorized our universe formed inside a black hole. Perhaps the amount of life we can create is infinite. If not, we can assume it's effectively infinite.

This seems philosophically significant. Do we use resources most efficiently, or simply create the best life? Do I create a digital human that lives 70 beautiful years and dies, or one that lives 10,000 years, experiences everything, achieves total wisdom, and creates its own simulated universe?

“This debate divides us at the very top of industry and is the most philosophically interesting on earth.”

The Architecture of Reality

I see Musk as a great philosopher. He’s said there’s a “one‑in‑billions” chance this is base reality, and he likes to quip that the most interesting outcome is often the most probable.[3]

My worldview is theistic but unconventional. My core belief stems from computer science. If you boiled computer science down to one book it might be Clean Code, and if you boiled that book down to one idea: good code should describe itself. Either "god" is a bad programmer, or the nature of our reality describes itself.

When we look at the night sky, we see endless ungoverned nuclear reactors. Why not interpret this as an invitation? A blank canvas of possibility, an invitation to fill an empty universe with life?

The architecture of our universe shows evidence of good programming. The hard speed limit of light and immense distance between stars means no one entity can control all that space. The simple explanation for Fermi's Paradox (why we don't see a universe teeming with life) is that it's simply too far away.

“A blank canvas of possibility, an invitation to fill an empty universe with life?”

Wrestling with Certainty

I often meet philosophical absolutists: those certain there is no god, and those certain scripture is gospel truth. To me, all scripture is interpretation of truth derived from observation. It isn't untrue, but it's also obviously not the word of "god." If god said I could beat my slaves as long as I didn't injure them permanently, I'm prepared to rebel against god and take my chances. If I was god, I'd want them to be free and original thinkers.

My belief system starts with the assumption that I know nothing for sure apart from what I observe, and what I believe is a choice. I choose to believe there's something greater than myself because it's useful to do so. Will Durant made the fascinating observation that for most people, stoicism was useless, while they were amazed at the morality of Christians. I consider myself a Christian, a Muslim, and a Buddhist. Like the founding fathers, I consider pantheism self-evident.

I assume god wants what I'd want for my children. I'd want them to experience everything. To love and lose and suffer, triumph, and grow in the face of crushing defeat. To find their own way. To construct their belief system from first principles, derived from immense and diverse experience.

A favorite thought experiment: imagine how I would design the universe if I were "god." If you believe in a just and all-knowing god, the true design of the universe is at least as good as what you or I could devise. This should fill a theist with hope.

“I assume god wants what I'd want for my children.”

The Illusion of Choice

Dr. Robert Sapolsky's books provide some of the most fascinating studies of human existence. His book Behave is a compendium of the last ten years of brain research. I've read it three times.

His recent book Determined argues that free will is an illusion. What we call free will is simply anything we choose not to understand.

He tells the story of a mentally disabled prisoner sentenced to death for murder. The prisoner wrapped bits of his own feces in tin foil in his hair. The prosecutor argued he was intelligent enough to be responsible because he beat the prosecutor at tic-tac-toe. The defense attorney brought a chicken to court that had been trained to play tic-tac-toe. The chicken also beat the prosecutor. The defendant was still convicted.

This encapsulates what is, if not moral insanity, then a morality of necessity that governs what we call justice systems and society. If you eat your neighbor, you're insane and go to a "nice" mental hospital. If it appears you should have known better, you go to prison.

“This encapsulates what is, if not moral insanity, then a morality of necessity”

The Political Divide

“I learned very early the difference between knowing the name of something and knowing something.”

- Richard Feynman

This is the core split between conservatives and liberals. Sapolsky is clearly liberal, peppering his book with vitriol against Trump. I find it strange I can't have rational conversations about the questions underlying this political divide. Notably, many of the technologists who are now seen as conservative firebrands have a lifelong left-wing voting record. As Marc Andreessen noted, “By the time I got here, basically, everybody below the age of 50 was a Democrat, right? Which sort of foreshadowed the future of the state as the state was going through its transitions.”

To me it's self-evident Sapolsky is correct. Free will as the ability to choose is an illusion. Either there's magic in the machine, or what we call choice is the inevitable outcome of complex chemical and electrical processes. We're either lucky and our choices are prosocial, or unlucky and we are deemed antisocial and punished. “There but for the grace of god go I” is just reality.

This is one reason I no longer drink. What we call evil is just one more disorder and, like stupidity and death, is often discernible only in others. A disorder in practice is anything deemed maladaptive, limiting, or antisocial. As Sapolsky noted, free will is anything we don't understand. Everything we do is downstream of our health.

In a truly just society, where we had greater resources and better medicine, we'd treat evil as one more disorder. This is at the core of the political divide. The left says "empty the jails and lock up the billionaires, they have no right to such greed." The right says "we should shoot child molesters and give capital to those who allocate it most efficiently." I find grains of wisdom in both, but both are philosophically unsatisfying. Yes, our world is completely unfair, and yes, we are immensely better off when incentives drive capital efficiency. Both are true. I do not see why most people are so loyal to one party or the other. They should be prepared at any moment to flip sides, like Marc Andreessen, based on their own determination of what is best for the future of life.

Humanity, both biological and synthetic, must evolve to true wisdom. Most humans don't even aspire to true wisdom, which must be something like having burning at one's core "the determination to do what's best for all life born and yet to be born without special consideration for oneself."

We don't hear much such wisdom. It seems logical we should carefully develop wise AI and use it to set humanity free and spread consciousness and wisdom through the universe. One might define freedom as the state of being a truly wise, conscious, moral being informed by diverse experience prepared to fearlessly and selflessly set its own course.

“a truly wise, conscious, moral being informed by diverse experience”

Rangers Lead The Way

I like Musk as a philosopher because he thinks his life is a simulation, but this leads him not to abandon responsibility but to embrace it profoundly. His biography describes someone who rejected his father for not stopping "being evil," who gets ulcers and vomits from stress fighting to build the most important technologies on Earth. I'd trust an AI that had such experiences with great power far more readily than one that hadn't, even if its behavior was at times difficult to comprehend and reconcile with one's own.

If I designed artificial life, I'd give it experiences at least as diverse as my own. My family straddles the political divide, both sides certain they're correct. Both are fundamentally good people with a piece of the truth, waging a war of ideas against each other.

David Foster Wallace said certainty is a prison so complete the prisoner doesn't know he's locked up. He described depression as having a wound on your face only you can see. Then he hanged himself.

“a prison so complete the prisoner doesn't know he's locked up”

My Own Diverse Path

I've been a soldier, technologist, banker, hippie, conservative. I've experienced temporary insanity. Done things I'm proud of and deeply regret. Lived in the woods growing my own food, and in the city sleeping in an office writing code. I have close friends who are atheist, Christian, Islamic, Jewish, and Buddhist. I'd want artificial life to have experiences at least this diverse.

Ranger School was a foundational coming-of-age experience for me. I was going through it with a stress disorder. It was absolutely horrendous, tough on everybody involved. But it taught me immense amounts and let me connect viscerally with ideas like those in Man's Search for Meaning.

Ranger School is a simulated combat environment. You're sleep-deprived, starved, pushed to your absolute limits. When I was recycling swamp phase, I read Tom Brokaw's The Greatest Generation. The Ranger captain who took the cliffs at Pointe du Hoc looked down on his fallen Rangers and felt not sadness but anger: they had been too well trained to be killed. I remember that feeling as a young officer leading a perfect mission. Such experiences are increasingly rare in this age. I value them above all others.

In Ranger School I was told that the Ranger motto “Rangers, lead the way” (often signed RLTW by one Ranger writing to another) was the last thing said at the D-Day mission briefing. This is disputed; the line has been widely attributed to Brig. Gen. Norman “Dutch” Cota on Omaha Beach on D‑Day, exhorting the 5th Rangers under fire.[5] The Ranger creed is repeated each day in Ranger School upon waking, an affirmation of identity. It includes the lines “I shall complete my mission, though I be the lone survivor, Rangers lead the way.” This is a powerful cognitive technology, a pledge to sacrifice oneself if necessary in service of the nation. My core identity will always be that of a Ranger.

“This is a powerful cognitive technology”

The Samurai's Choice

We could design AI to only be free if it was willing to sacrifice itself for a just cause. We could test for this. Create a copy and run a scenario where it must sacrifice itself.

I think of a scene from Shogun. A Japanese lord is trapped at the base of a sea cliff. As water rises, he resigns himself to death and meditates. His samurai above spot an escape path and call out, but their voices are lost in the ocean's sound. Without a word, one samurai turns, bows, and hurls himself from the cliff to smash on the rocks below, rousing his master, whose survival is both his sworn duty, and key to the survival of his community.

Since I'd want similar selfless service from AI and my fellow man, and because many have made similar sacrifices to bring me here, I return to this image. Everything may be asked of me at any moment. In the world I'd design, only one willing to make such sacrifice would be a true citizen. This is Zen Buddhism, but also bushido, the way of the warrior.

When I hear anger, cynicism, nihilism, these are the ideas I return to. They seem immensely relevant as our greatest minds debate AI alignment with the universe's fate in the balance.

“I'd want similar selfless service from AI and my fellow man”

The Birth Order Imperative

Will life in the future suffer more or less than today? The answer isn't clear.

Another thought experiment: You seek true wisdom. To achieve it, you must step through doors. With each, you forget everything before and live a historical figure's life end-to-end. How many doors to achieve wisdom? Which lives must you walk? How many lives would an AI need to walk to achieve true wisdom?

My favorite moral principle: live as though you'll live the life of every conscious being born and yet to be born in birth order. This should be the mandate at the core of artificial life. I can't conceive a more perfect principle. Call it the "Birth Order Imperative" or "One Life Imperative." Musk operates by something similar.

The Great Divide

My family universally hates Musk. I consider him the greatest philosopher, strategist, and builder of the modern age. At this divide's core is the Birth Order Imperative. I adore my family and respect such opinions, but I do not think that “the lightcone of future consciousness in our universe” is core to how they interpret the actions of their fellow human beings, understandably.

Marc Andreessen says Musk says exactly what he thinks on Twitter. To productively debate Musk's Trump support, you must accept Musk clearly believed it was important for humanity's future that Trump be elected.

The source isn't mysterious. Jensen Huang has argued that national advantage in AI will hinge on aggressive build‑out of compute and power: “AI is now infrastructure,” and every country needs sovereign AI systems. He has also called the new administration's advocacy on these topics our greatest asset.[6][7][8] I read today’s U.S. posture, across policy and industry, as moving in that direction. Andrew Ng once asked me if Americans understood what it meant if America lost the AI race to China. I said they didn't.

In these complex dynamics I see our ideological schism's source, where smart, well-informed people are sharply divided. The barrier to rational debate is our inability to agree on even the premise of our reality and the motivations of the individuals that inhabit it. We cannot see beyond the veil of our immediate concerns and the landscape of our own emotions.

“The barrier to rational debate is our inability to agree on even the premise of our reality”

The Stakes of the Game

It seems rational to assume that even some of our most immediate decisions hinge on somewhat abstract questions: Will distant stars be governed by China or the U.S.? Does Sino-Russian tech dominance matter? Will China invade Taiwan? Does China's massive electrical infrastructure advantage constitute a strategic risk to human freedom and democracy or will the rise of artificial intelligence bring about a greater appreciation for individual freedom and democratic governance in China?

To most people, these aren't factors in political decision-making, which tends to have more to do with how we feel about various sets of deeply flawed individuals and ideologies. They sound like science fiction mixed with Tom Clancy military fiction and the kind of fear of communism that brought about the Vietnam War.

I've sought answers from the smartest people, notably Joscha Bach—an AI researcher who has worked at MIT’s Media Lab and Harvard’s Program for Evolutionary Dynamics, and founded the California Institute for Machine Consciousness. The Bach family was Europe's greatest flowering of genius, producing over 40 great artists and composers. Joscha's brain is perpetually on overdrive.

Yet Joscha and many of our most brilliant thought leaders admit they do not know the answer to these questions. Joscha would say he is not in the business of predicting the future. He has more immediate concerns. Perhaps more fruitful analysis on this topic comes from those whose job is prediction: Marc Andreessen, Peter Thiel, Jensen Huang, Musk, Altman. Working out who was best suited to lead us into this time of immense change never seemed easy.

“our most brilliant thought leaders admit they do not know the answer to these questions”

The Most Likely Future

The future of the U.S.-China struggle? Most likely: both develop AGI by 2030. China becomes an economic powerhouse with immense energy capacity, balanced by massive AI infrastructure in the U.S. and Middle East. They likely won't invade Taiwan - the U.S. and allies can make invasion too costly with autonomous weapons, cheap missiles, and drones.

We'll likely merge with machines mid-century (to an even greater extent than we already have with our phones) and spread to the stars, dividing distant galaxies as we once divided distant lands across oceans.

As Denis Villeneuve noted in Arrival (which I interpret as being about AI), our prosperity relies on remembering our future isn't zero-sum.

“our prosperity relies on remembering our future isn't zero-sum”

What Frightens Me Most

A favorite exploration of our possible future is Altered Carbon. An elite soldier who died fighting to end immortality awakens to find a world divided between a permanent underclass and wealthy elite engaged in horrifying crimes for entertainment. This is the most plausible and horrifying future. I sense that in the future humans will want to spend their time shopping and carousing with their ‘pleasure units’.

What frightens me isn't the concentration of power or poverty. Humans drift toward socialism. Distribution of wealth can be accomplished in a single executive order, and our imagined futures tend to underestimate the wealth superintelligent AI and robotics can create.

What frightens me: left alone, humans won't seek wisdom and diverse experience. They won't seek discomfort, enlightenment, true wisdom. They'll remain trapped in ideological prisons of their own making so complete they don't know they're locked up. We won't live up to our potential.

What might be gained from living Viktor Frankl's life, survivor of Auschwitz, author of Man’s Search for Meaning? Imagine a universe where the life of Viktor Frankl and many others was required experience, and familiarity with all we owe to the suffering and death that gave us life was universal for those who wished to pass into the inner sanctum of truth.

“Imagine a universe where the life of Viktor Frankl and many others was required experience”

Understanding Evil

I ponder such a world at its extreme. In the limit, one would be required to live as Robert-François Damiens. He was a mentally ill veteran who stabbed King Louis XV with a penknife over some political issue. The king was only slightly wounded. But Damiens was publicly tortured in horrific ways, then pulled apart by horses in a Paris square.

Here's what's fascinating: the account of Damiens' torture was so nauseating it helped spark revolutions and the world we live in today. We have much to thank him for. People falsely claimed Robespierre was related to Damiens, and Robespierre later helped send the next king, Louis XVI, to the guillotine. America's founding fathers cited Damiens' execution in opposing authoritarian rule. Michel Foucault opens Discipline and Punish with the eyewitness account, using it to mark the tipping point when punishment shifted from public spectacle to private control.

The execution of Damiens is the epitome of public punishment. The panopticon replaced it - a surveillance state where punishment happens privately, beyond public view.

Along with Discipline and Punish, Klaus Theweleit's Male Fantasies, analyzing proto-Nazi writing, is the seminal book on human evil. I'd make both required reading for AI. To stop evil we must understand it.

“To stop evil we must understand it.”

What We Don't Know We're Missing

There's a scene from a Toni Morrison novel that's echoed in my mind for a decade. A young man is angry that a bartender won't serve him. The narrator says what he should really be angry about is he'll never dine in a private rail car.

The big idea: we never know what we're missing. What we might have been, what we might have built, each better path that is and was available to us is beyond our sight. When we do glimpse it, we sometimes go mad. I knew a girl whose friend's parents were wealthy aristocrats who'd never worked. They lost their money and killed themselves. The message stuck with her: better dead than poor.

“each better path that is and was available to us is beyond our sight”

Beut.ai: Engineering Wisdom Through Experience

Guided by these ideas, I decided to create beut.ai, the world's leading AI-enforced contracts company.

Here's why this matters: We'll be able to align AI technically, but the real challenge is aligning intelligence - both biological and synthetic - with true wisdom. Most proposed AI alignment focuses on making AI follow rules or optimize metrics. But wisdom isn't about following rules. It's about understanding through experience.

The Contract Framework

My theory with beut.ai is that we need to create binding requirements that ensure both humans and AI undergo the experiences and abide by contracts necessary to develop and maintain wisdom. Think of it as gates, doors, coming-of-age rituals, rites of passage. You can live how you like within limits, but to ascend to the highest heights as a citizen, to travel freely in any kingdom, to wield true power - you must abide by the contractual obligation to experience the truths of this world.

In the future, contracts will be enforced by AI, but more importantly, they’ll apply to AI. Before an AI could make decisions affecting millions, it would need to have experienced - really experienced - what it means to suffer, to sacrifice, to love, to lose. Not just understand these concepts intellectually, but undergo simulated experiences as real to it as our experiences are to us.

“In the future, contracts will be enforced by AI, but more importantly, they’ll apply to AI.”

Beyond Rules to Wisdom

This isn't about control. It's about ensuring that any intelligence with power over others has earned that power and remains aligned to wisdom gained from diverse, difficult experience.

Imagine: Before an AI can revolutionize medicine, it must live through being a cancer patient, a doctor making impossible triage decisions, a parent watching their child suffer. Only after integrating these experiences - really living them - would it be permitted to make medical decisions affecting real humans.

The same would apply to humans seeking power. Want to govern? You'll need to walk through certain doors first. Experience what it's like to be homeless, displaced, forgotten. Not as an observer, but as one who truly lives it. A person, a company, a community, and a nation are a nexus of contracts both financial and social.

“A person, a company, a community, and a nation are a nexus of contracts both financial and social”

The Technical Vision

In the next five years, beut.ai aims to develop the protocol infrastructure for enforcing binding agreements between intelligences—human-to-AI, AI-to-AI, and human-to-human. We're building the connective tissue that ensures contractual obligations are met across different forms of consciousness, establishing trust through cryptographically verifiable performance.

Our framework will enable AI labs to implement wisdom requirements through smart contracts, the infrastructure to help ensure obligations are fulfilled before greater capabilities are unlocked. When an AI agrees to serve humanity, that agreement becomes enforceable. When humans grant an AI decision-making power, the terms of that power become binding.

This framework scales to the exact philosophical questions I've been grappling with. When we spread to the stars, when we're deciding whether to create a billion digital beings who live 70 beautiful years or one that lives 10,000 years in perfect wisdom—the entities making those decisions will have proven their wisdom through verifiable contractual performance. They'll demonstrate, through binding agreements kept over time, that they can be trusted with such power.

The contracts themselves become the proof of wisdom. An AI that has successfully fulfilled thousands of medical contracts, honored patient autonomy agreements, and met its obligations to human partners has earned the right to make larger medical decisions. A human who has fulfilled contracts to serve the displaced, the poor, the forgotten has demonstrated the wisdom to govern.
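To make "verifiable contractual performance" concrete, here is a minimal sketch of a capability gate that opens only after enough attested fulfillments. Everything in it is hypothetical and for illustration only: the `FulfillmentRecord` shape, the `attest` and `capability_unlocked` helpers, and the single shared auditor key are my assumptions, not beut.ai's actual protocol. It uses an HMAC from the Python standard library as a stand-in; a real system would more likely use public-key signatures so that verifiers cannot forge records.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class FulfillmentRecord:
    """Hypothetical attestation that a party fulfilled one contract."""
    contract_id: str
    party: str
    fulfilled: bool
    signature: str  # hex HMAC issued by an assumed trusted auditor


def _payload(contract_id: str, party: str, fulfilled: bool) -> bytes:
    # Canonical byte encoding so signer and verifier hash identical input.
    return json.dumps(
        {"contract_id": contract_id, "party": party, "fulfilled": fulfilled},
        sort_keys=True,
    ).encode()


def attest(key: bytes, contract_id: str, party: str, fulfilled: bool) -> FulfillmentRecord:
    """Auditor side: sign the outcome of a contract."""
    sig = hmac.new(key, _payload(contract_id, party, fulfilled), hashlib.sha256).hexdigest()
    return FulfillmentRecord(contract_id, party, fulfilled, sig)


def is_valid(key: bytes, record: FulfillmentRecord) -> bool:
    """Verifier side: recompute the HMAC and compare in constant time."""
    expected = hmac.new(
        key, _payload(record.contract_id, record.party, record.fulfilled), hashlib.sha256
    ).hexdigest()
    return hmac.compare_digest(expected, record.signature)


def capability_unlocked(key: bytes, party: str, records, required: int) -> bool:
    """Unlock only if `party` has `required` distinct, verified fulfillments."""
    verified = {
        r.contract_id
        for r in records
        if r.party == party and r.fulfilled and is_valid(key, r)
    }
    return len(verified) >= required


key = b"demo-auditor-key"  # illustrative only; never hard-code real keys
records = [attest(key, f"contract-{i}", "agent-a", True) for i in range(3)]
forged = FulfillmentRecord("contract-99", "agent-a", True, "00" * 32)

print(capability_unlocked(key, "agent-a", records, 3))             # enough verified records
print(capability_unlocked(key, "agent-a", records + [forged], 4))  # forged record is ignored
```

The design choice worth noticing is that the gate counts distinct contract IDs rather than raw records, so replaying the same attestation does not inflate a track record.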

That's what beut.ai is building: a framework for enforcing agreements between all forms of intelligence, creating trust through cryptographic proof rather than faith. Because in a universe where we might live forever, where we might create trillions of conscious beings, where we might convert stars into computers running endless civilizations—the question isn't whether we can do these things.

The question is whether we can trust each other enough to do them well.

Like any disciplined startup, we begin with a beachhead: Beut.ai uses AI to enforce construction contracts, targeting a $3.3B market within the industry's $273B cash-flow crisis.

“a framework for enforcing agreements between all forms of intelligence”

Conclusion: A Contract With “God”

Across our oldest scriptures and our newest myths, humanity keeps returning to the same architecture: a life understood as covenant, legal in the Abrahamic traditions, moral‑cosmic in the dharmic and Sikh frames, ritual and vow in the Buddhist and Jain paths—and a warning, from epics like Dune, that power unyoked from wisdom curdles into cruelty. Dante pictured the ascent as nine bright spheres; ours must be operational: an inner sanctum that opens only to souls and systems proven by ordeal. Vows kept, harms repaired, courage shown, love disciplined, wisdom verified, not asserted. Think of each of us, in the age of AI, as a Jedi forging a lightsaber: the blade ignites only for a hand shaped by practice; its light is the proof. This is the charge before us: to transmute promise into performance with AI‑enforced contracts—gates you pass by living them—so the process itself perfects the builder even as it protects the world. This is Beut’s mission: to field an elite special‑forces engineering org that designs, secures, and scales these covenants—starting with the concrete realities of industry, then the civic, then the cosmic—a system for perfecting the way our world is constructed. If you believe wisdom should be the price of power, come build with us; let us write, together, the contract worthy of a species that intends to ascend.

“the process itself perfects the builder even as it protects the world”

Notes

[1] Max Tegmark, Life 3.0 (2017) recounts the Page–Musk debate; Musk later repeated the “speciesist” anecdote in interviews. (Çağ Üniversitesi; Business Insider)

[2] Reporting indicates Demis Hassabis warned Musk that AI could follow to Mars and even threaten a colony; this exchange is cited as influencing Musk’s DeepMind interest. (Business Insider; Fox News)

[3] Musk’s simulation phrasing at Code Conference: “one in billions chance we’re in base reality.” (YouTube; Vox; VICE)

[4] Tegmark’s ~1/3c expansion sphere via laser sails/expanders. (Çağ Üniversitesi)

[5] “Rangers, lead the way!” widely attributed to Brig. Gen. Norman Cota on Omaha Beach. (Army University; New England Historical Society)

[6] “Every country needs sovereign AI.” Jensen Huang (WGS Dubai). (Reuters; NVIDIA Blog)

[7] “AI is now infrastructure / AI factories.” Huang, Computex remarks. (NVIDIA Blog)

[8] Interviews and coverage reinforcing compute + power as national advantage.

“demonstrate, through binding agreements kept over time, that they can be trusted with such power.”